Observability

Observability: A Complete Guide to Understanding, Implementing, and Scaling IT Visibility

What Is Observability?

Observability is a concept rooted in control theory that has evolved to become a cornerstone of modern IT operations. In its most fundamental form, observability is the ability to infer the internal state of a system based on the data it produces—namely, its outputs, such as logs, metrics, and traces.

In software systems, observability refers to how well internal states of a system can be understood from the outside by examining:

- Logs – Text-based records of discrete events and processes within a system

- Metrics – Numerical values that represent the performance or state of specific components

- Traces – Records that follow the path of a request through various services in a distributed system

This data enables teams to detect, investigate, and resolve issues quickly, reducing the risk of service disruptions and ensuring optimal performance for end-users.

Learn more with this high-level overview of what observability is and why it matters.

A Shift from Traditional Monitoring

While monitoring answers the question “Is the system working as expected?”, observability goes further, enabling teams to answer “Why is it not working?”

| Monitoring | Observability |
|---|---|
| Tracks known failure modes | Identifies unknown issues and root causes |
| Reactive | Proactive and diagnostic |
| Focus on metrics only | Ingests metrics, logs, traces (telemetry) |
| Answers “what happened?” | Answers “why did it happen?” |

This shift is critical in modern, cloud-native, and distributed environments where traditional monitoring tools may fall short in providing the visibility and diagnostics required to ensure system resilience.

Definition Summary

Observability is the practice of collecting, correlating, and analyzing telemetry data from distributed systems to gain deep visibility and actionable insights into system behavior and health.

As organizations scale their IT infrastructure and adopt microservices, containers, and multi-cloud strategies, observability becomes a strategic enabler of uptime, performance, and digital transformation initiatives.

Why Is Observability Important in Modern IT?

In today’s dynamic and distributed IT environments, observability is no longer a luxury—it’s a necessity. The growing complexity of digital systems, coupled with rising user expectations and aggressive SLAs, makes it imperative to understand not only what’s happening within a system but also why and how it is happening.

Key Reasons Why Observability Matters

- Proactive Incident Detection

  - Observability enables early detection of anomalies before they turn into outages

  - It empowers teams to investigate potential issues with minimal latency

- Faster Mean Time to Resolution (MTTR)

  - Deep visibility shortens the incident lifecycle from detection to remediation

  - Reduces costly downtime and improves user satisfaction

- Support for Complex, Distributed Systems

  - Modern architectures such as microservices, containers, and serverless demand correlated telemetry for effective troubleshooting

  - Observability unifies signals from diverse systems into a coherent narrative

- SLA and Compliance Management

  - Enables tracking of service-level indicators (SLIs) and objectives (SLOs)

  - Helps ITOps leaders or managers demonstrate compliance and accountability to stakeholders

- Empowered Teams and Culture of Ownership

  - Developers and SREs can self-diagnose and own their services’ performance

  - Enhances cross-team collaboration through shared dashboards and insights

- Business Continuity and Digital Experience

  - Ensures digital products perform reliably across channels

  - Minimizes customer churn due to performance degradation or outages

Observability as a Strategic Asset

For tech leaders and IT Operations managers, observability offers strategic benefits:

| Strategic Objective | Observability Contribution |
|---|---|
| Reduce downtime | Early alerts and root cause identification |
| Improve IT efficiency | Streamlined workflows, fewer tools, automated insights |
| Optimize resource planning | Real-time metrics support capacity and usage forecasts |
| Support digital transformation | Ensures infrastructure reliability and agility |
| Control costs | Helps eliminate tool redundancy and reduces incident overhead |

By delivering real-time, actionable insights across environments, observability supports both the technical goals of IT teams and the strategic goals of the organization, such as innovation, customer satisfaction, and revenue continuity.

Observability vs Monitoring: What’s the Difference?

While often used interchangeably, observability and monitoring serve distinct purposes in modern IT operations. Understanding the difference is crucial for building resilient systems and choosing the right tooling strategy.

Monitoring: Measuring Known Conditions

Monitoring is the process of collecting predefined sets of metrics and events to evaluate system performance. It’s largely reactive, designed to alert teams when thresholds are crossed or components become unavailable.

Traditional characteristics of monitoring include:

- Predefined dashboards and alerts

- Static thresholds

- Focus on known failure scenarios

- Suitable for stable, monolithic environments

Example: Monitoring may alert you that CPU usage has exceeded 95%—but it won’t explain why that happened.

Observability: Diagnosing the Unknown

Observability, on the other hand, is a proactive and diagnostic discipline that focuses on understanding the why behind system behavior by analyzing telemetry data such as metrics, logs, and traces. It supports complex, dynamic systems where failure modes are unknown or unpredictable.

Observability allows teams to:

- Explore emergent behavior in real time

- Ask ad hoc questions and get meaningful answers

- Correlate data across services, layers, and infrastructure

- Uncover the root cause of incidents without prior alert definitions

Example: Observability helps trace a performance drop to a misconfigured microservice introduced in the latest deployment.

Observability and Monitoring: Side-by-Side Comparison

| Feature | Monitoring | Observability |
|---|---|---|
| Primary Goal | Alert on known issues | Investigate unknown issues |
| Focus | System health | System behavior |
| Data Types | Metrics (primarily) | Metrics, logs, traces (telemetry data) |
| Use Case | Threshold-based alerting | Root cause analysis, debugging |
| Approach | Reactive | Proactive and diagnostic |
| Adaptability | Limited to predefined conditions | Dynamic, flexible querying |
| Tooling Output | Dashboards and alerts | Insights and correlations |

Read this article from Centreon for a more in-depth exploration of the difference between observability and monitoring, including real-world examples.

Complementary Capabilities

Rather than replacing monitoring, observability enhances and expands it. Monitoring remains a necessary part of the observability stack, especially for uptime tracking and alerting. However, true observability:

- Supports exploratory analysis during incidents

- Bridges visibility gaps in distributed architectures

- Enables continuous improvement by uncovering latent system weaknesses.

For IT teams and Tech Leads who need full visibility across infrastructure, and for IT or ITOps managers who require system-level assurance for SLA reporting and optimization, observability provides a depth of insight that traditional monitoring alone cannot deliver, especially when several IT monitoring platforms are in use, a common source of blind spots.

The Three Pillars of Observability

Observability is built on a foundation commonly referred to as the three pillars: metrics, logs, and traces. These telemetry signals provide complementary perspectives on system behavior and form the core of any observability strategy.

1. Metrics: The Quantitative Lens

Metrics are numerical values that represent system state or performance over time. They are highly efficient, aggregatable, and ideal for alerting and trend analysis.

Examples include:

- CPU and memory usage

- Request throughput and latency

- Disk I/O and network utilization

- Number of active sessions or users

Key benefits:

- Lightweight and fast to collect

- Ideal for dashboards and threshold-based alerts

- Useful for capacity planning and SLA monitoring

Metrics are the starting point for most performance investigations, especially when monitoring KPIs such as availability or MTTR.
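To make the alerting use case concrete, here is a minimal Python sketch that turns raw latency samples into a per-minute 95th-percentile series and flags a breach only when it persists for several consecutive windows, one common way to filter out momentary spikes. The one-minute window, the 300 ms threshold, and the three-window rule are illustrative assumptions, not recommended values.

```python
from collections import defaultdict


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def window_p95(samples, window_s=60):
    """Group (timestamp_s, latency_ms) samples into fixed windows and return the
    p95 of each non-empty window, oldest first (empty windows are skipped)."""
    buckets = defaultdict(list)
    for ts, latency in samples:
        buckets[int(ts // window_s)].append(latency)
    return [p95(buckets[w]) for w in sorted(buckets)]


def sustained_breach(series, threshold, consecutive=3):
    """True if the series stays above threshold for `consecutive` windows in a row."""
    run = 0
    for value in series:
        run = run + 1 if value > threshold else 0
        if run >= consecutive:
            return True
    return False


# Usage: one healthy minute followed by three slow minutes.
samples = [(t, 120) for t in range(0, 60)] + [(t, 450) for t in range(60, 240)]
series = window_p95(samples)
print(series)                                   # [120, 450, 450, 450]
print(sustained_breach(series, threshold=300))  # True
```

Requiring several consecutive windows trades a little detection delay for fewer alerts on one-off blips.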

2. Logs: The Narrative Context

Logs are timestamped, text-based records that describe what happened within a system or application. They are often unstructured or semi-structured, and provide contextual information about events.

Examples include:

- Authentication success/failure logs

- API call logs with request payloads

- Application-specific debug messages

- System errors and warnings

Key benefits:

- Rich, detailed storytelling of events

- Essential for troubleshooting and auditing

- Can be parsed and analyzed for patterns

Logs answer the question “what exactly happened?”, offering invaluable support in root cause analysis and security investigations.
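Logs are much easier to parse and correlate when they are emitted as structured records rather than free text. The sketch below uses only Python's standard `logging` module with a small custom formatter that writes one JSON object per line; the `service` and `trace_id` fields are hypothetical context fields passed via `extra=`, not something `logging` adds on its own.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical context fields, present only if passed via `extra=`.
        for key in ("service", "trace_id"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate output if the root logger is configured

logger.warning("Payment gateway timeout", extra={"service": "payment", "trace_id": "abc123"})
```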

3. Traces: The Journey Map

Traces capture the path a request takes across multiple components in a distributed system. They are essential in microservices architectures, where a single user action might span dozens of services.

Each trace consists of a series of spans, each representing a unit of work—like a database call or API request.

Key benefits:

- End-to-end visibility of transactions

- Pinpoint bottlenecks and performance regressions

- Supports user experience optimization

Traces answer the question “where did the problem occur?” in complex, service-oriented environments. Tracing allows IT teams to follow the journey of a request across distributed services—capturing every call, span, and dependency. This granular visibility is essential not just for performance optimization but for reconstructing the precise conditions of an incident. Understanding the full request path helps accelerate debugging processes by narrowing down the scope of potential root causes, especially in microservices-based environments.
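The span structure described above can be modeled with a few fields: an ID, a parent ID, a name, and start and end times. This simplified Python sketch (real tracing systems such as OpenTelemetry record considerably more) computes each span's self time, that is, its duration minus the time spent in its direct children, which is one way to narrow down where a slow request actually spent its time. Span names and timings are invented for illustration, and the calculation assumes child spans do not overlap.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    name: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def self_times(spans):
    """Map each span name to its duration minus its direct children's durations.

    Assumes span names are unique within the trace and children do not overlap
    (parallel children would make the parent's self time negative).
    """
    child_total = {s.span_id: 0.0 for s in spans}
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] += s.duration_ms
    return {s.name: s.duration_ms - child_total[s.span_id] for s in spans}


trace = [
    Span("1", None, "POST /checkout", 0, 900),
    Span("2", "1", "inventory.reserve", 10, 110),
    Span("3", "1", "payment.charge", 120, 880),
    Span("4", "3", "db.insert", 130, 160),
]
times = self_times(trace)
slowest = max(times, key=times.get)
print(slowest, times[slowest])  # payment.charge 730.0
```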

Unified Observability Through Telemetry

When combined, metrics, logs, and traces provide a 360-degree view of system behavior. This triangulation allows IT and development teams to:

- Correlate symptoms (e.g., a spike in errors) with causes (e.g., a misconfigured service)

- Detect anomalies quickly, even without predefined rules

- Reduce time spent switching between tools and data sources

In advanced observability stacks, these telemetry sources are collected, stored, correlated, and visualized within a single interface for maximum usability and insights.
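A common correlation technique is to stamp every log record with the same trace ID as the request's spans, so a slow span can be joined directly to the log lines emitted while it ran. The Python sketch below assumes spans and logs have already been collected as plain dictionaries; the field names, services, and messages are hypothetical.

```python
from collections import defaultdict


def correlate(spans, logs, slow_ms):
    """Group log messages by trace ID for every trace that has a span slower than slow_ms.

    spans: dicts with "trace_id", "name", "duration_ms"
    logs:  dicts with "trace_id", "level", "message"
    """
    slow_traces = {s["trace_id"] for s in spans if s["duration_ms"] > slow_ms}
    related = defaultdict(list)
    for entry in logs:
        if entry["trace_id"] in slow_traces:
            related[entry["trace_id"]].append(f'{entry["level"]}: {entry["message"]}')
    return dict(related)


spans = [
    {"trace_id": "t1", "name": "orders.db_query", "duration_ms": 2400},
    {"trace_id": "t2", "name": "orders.db_query", "duration_ms": 85},
]
logs = [
    {"trace_id": "t1", "level": "WARN", "message": "connection pool exhausted"},
    {"trace_id": "t2", "level": "INFO", "message": "order created"},
]
print(correlate(spans, logs, slow_ms=1000))  # {'t1': ['WARN: connection pool exhausted']}
```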

Summary Table: The Three Pillars of Observability

| Pillar | Format | Purpose | Strengths |
|---|---|---|---|
| Metrics | Numeric series | Quantitative health indicators | Fast, alerting-friendly, scalable |
| Logs | Text records | Descriptive event data | Rich context, detailed errors, user behavior |
| Traces | Hierarchical paths | End-to-end transaction view | Dependency mapping, pinpoint latency issues |

Each pillar contributes uniquely to a complete observability strategy. Together, they empower IT teams to observe, understand, and improve modern IT systems.

Observability in DevOps and SRE

Observability plays a critical role in enabling DevOps practices and Site Reliability Engineering (SRE) by offering the visibility, diagnostics, and confidence required to deploy code faster, safely, and continuously.

In both disciplines, success is measured not only by system uptime but by the ability to detect and resolve issues early, automate response workflows, and minimize user impact. Observability provides the data-driven foundation for all of this.

The DevOps Perspective

DevOps teams are responsible for building, testing, releasing, and monitoring applications—often within CI/CD pipelines (Continuous Integration / Continuous Delivery) that automate and accelerate code deployment. Observability enhances DevOps by:

- Enabling shift-left debugging: Developers can investigate system behavior during development and staging.

- Reducing Mean Time To Identify (MTTI): Easier identification of failed deployments or performance regressions.

- Supporting blue/green (dual-production environments) and canary (progressive rollout to a subset of users) deployments: Real-time metrics and logs reveal impact before widespread rollout.

With observability in place, DevOps teams can release more frequently while maintaining control and reliability.

Observability also supports continuous delivery by providing real-time feedback loops. When developers push code changes, telemetry data offers immediate insight into stability, performance, and service integrity—reducing the risk of silent failures reaching production. In this way, observability fosters trust and autonomy among software engineers, accelerating innovation without compromising reliability.
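The canary comparison mentioned above can be reduced to a simple rule: compare the canary cohort's error rate with the stable baseline and stop the rollout if it is materially worse. This Python sketch assumes request and error counts are already available per cohort; the 1.5x ratio, the 0.1% floor, and the 500-request minimum are arbitrary example values, and production canary analysis often relies on statistical tests across several metrics rather than a single ratio.

```python
def error_rate(errors, requests):
    """Errors per request; 0.0 when there is no traffic."""
    return errors / requests if requests else 0.0


def canary_verdict(baseline, canary, max_ratio=1.5, floor=0.001, min_requests=500):
    """Compare (errors, requests) for the stable baseline and the canary cohort.

    Returns "wait" until the canary has enough traffic, then "rollback" if its
    error rate exceeds max_ratio times the baseline (never less than `floor`),
    otherwise "promote".
    """
    if canary[1] < min_requests:
        return "wait"
    # The floor keeps a near-zero baseline from turning a single canary error
    # into an automatic rollback.
    allowed = max(error_rate(*baseline) * max_ratio, floor)
    return "rollback" if error_rate(*canary) > allowed else "promote"


print(canary_verdict(baseline=(40, 20_000), canary=(9, 1_000)))  # rollback
print(canary_verdict(baseline=(40, 20_000), canary=(2, 1_000)))  # promote
```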

For more insights on how observability empowers DevOps workflows and accelerates release confidence, read Centreon’s guide to observability in DevOps environments.

The SRE Perspective

SREs focus on maintaining service reliability and enforcing SLOs (Service Level Objectives). They depend on observability to:

- Define and monitor SLIs (Service Level Indicators)

- Automate incident response and postmortems

- Detect early signs of failure through anomaly detection

- Build self-healing systems using observability signals

Observability empowers SREs to shift from reactive firefighting to proactive reliability engineering.
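For an availability SLI, a common definition is the fraction of requests that succeeded; the error budget is then the number of failures the SLO tolerates over the same window, (1 - SLO) × total requests. The Python sketch below computes both, assuming good and total request counts for a single SLO window are already known; the 99.9% target and the request counts in the usage example are illustrative.

```python
from dataclasses import dataclass


@dataclass
class BudgetReport:
    sli: float               # observed fraction of good requests
    allowed_failures: float  # failures the SLO tolerates in this window
    actual_failures: int
    budget_consumed: float   # 1.0 means the error budget is fully spent


def error_budget_report(good, total, slo):
    """Availability SLI and error-budget consumption for one SLO window."""
    failures = total - good
    allowed = (1 - slo) * total
    if allowed > 0:
        consumed = failures / allowed
    else:  # a 100% SLO leaves no budget at all
        consumed = 0.0 if failures == 0 else float("inf")
    return BudgetReport(good / total, allowed, failures, consumed)


report = error_budget_report(good=999_400, total=1_000_000, slo=0.999)
print(f"SLI {report.sli:.4%}, budget consumed {report.budget_consumed:.0%}")
# SLI 99.9400%, budget consumed 60%
```

Here 600 of roughly 1,000 allowed failures have been spent. How a team reacts as consumption approaches 100%, for example by pausing risky releases, is a policy choice rather than something the calculation decides.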

Key Observability Use Cases in DevOps/SRE

| Use Case | Observability Impact |
|---|---|
| CI/CD pipeline validation | Detect regressions, failed deployments, environment issues |
| Performance testing | Measure real-world performance under load |
| Rollback and recovery | Diagnose causes and impact of bad releases |
| Service ownership & accountability | Enable developers to monitor their own code in production |
| Error budgeting | Track SLO violations to inform release decisions |

Observability as a Cultural Enabler

Beyond tooling, observability also fosters a culture of ownership, trust, and transparency, which are core tenets of DevOps and SRE:

- Developers can explore production behavior without gatekeeping

- Teams share a common telemetry layer and understanding of health

- Incident resolution becomes collaborative and data-driven.

By adopting observability early in the development lifecycle, organizations reduce risk and align their operations with agile, customer-centric delivery.

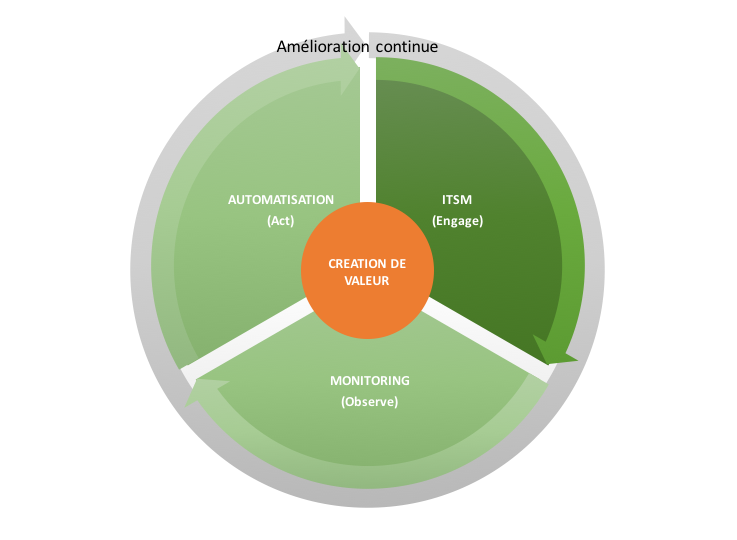

Observability in ITOps

As digital systems grow more distributed and complex, IT Operations (ITOps) teams are under increasing pressure to maintain service reliability, meet SLAs, and ensure smooth day-to-day IT delivery. Observability provides ITOps with the visibility, control, and intelligence needed to operate critical systems with confidence.

Unlike DevOps, which focuses on development and release velocity, ITOps is charged with ensuring stability, availability, and long-term performance—especially across heterogeneous, hybrid, or legacy environments.

Key Benefits of Observability for ITOps

1. Operational Visibility Across the Entire Stack

- Monitor infrastructure, applications, services, and dependencies from a single pane of glass.

- Detect performance degradation, failed components, or configuration drifts before users are impacted.

2. Incident Prevention and Root Cause Analysis

- Use historical telemetry and real-time alerts to detect patterns that signal potential issues.

- Accelerate incident response with contextual correlation across logs, metrics, and traces.

3. SLA Tracking and Compliance Reporting

- Align observability dashboards with business-critical SLIs and SLOs.

- Provide stakeholders with clear visibility into uptime, latency, and performance metrics.

4. Tool Consolidation and Process Efficiency

- Replace fragmented monitoring tools with unified observability platforms.

- Streamline workflows across NOC, SysAdmin, and infrastructure teams.

5. Support for Hybrid and Legacy Environments

- Observability solutions integrate with both modern cloud-native platforms and legacy systems.

- Ensure consistent visibility during digital transformation without losing control of existing systems.

For ITOps Managers, observability is not just a technical asset—it’s a strategic enabler of IT continuity, accountability, and cost control.

How Observability Empowers ITOps Teams

| Challenge | Observability Solution |
|---|---|
| Meeting SLAs and avoiding penalties | Real-time SLO dashboards, early warning signals |
| Maintaining legacy + cloud systems | Unified telemetry collection across environments |
| Diagnosing performance regressions | Correlated views across infrastructure and apps |
| Optimizing capacity and forecasting | Trend analysis, historical metrics, predictive insights |
| Reducing downtime and manual effort | Automation, alerting, and actionable root cause detection |
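The capacity forecasting row can be illustrated with the simplest possible trend model: fit a least-squares line to daily usage and extrapolate to the point where it meets capacity. This Python sketch assumes roughly linear growth and at least two days of data; real usage is often seasonal or bursty, so treat the result as a rough early warning rather than a prediction. The disk figures are made up.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept.

    Needs at least two distinct x values.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(daily_used_gb, capacity_gb):
    """Days after the last sample until the fitted trend reaches capacity.

    Returns None when usage is flat or shrinking, 0.0 if the trend is already past capacity.
    """
    days = list(range(len(daily_used_gb)))
    slope, intercept = linear_fit(days, daily_used_gb)
    if slope <= 0:
        return None
    crossing_day = (capacity_gb - intercept) / slope
    return max(0.0, crossing_day - days[-1])


used_gb = [400, 410, 420, 430, 440]  # one sample per day, oldest first
print(days_until_full(used_gb, capacity_gb=500))  # 6.0
```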

By adopting observability, ITOps teams gain not just technical depth, but strategic oversight—empowering them to make faster decisions, reduce operational risk, and contribute directly to the success of digital services.

As part of an observability strategy, Centreon’s open and extensible monitoring platform helps ITOps teams gain full-stack visibility, detect issues proactively, and ensure service continuity across complex environments.

Observability in the Cloud Era

As organizations adopt cloud-native architectures, observability has become an essential pillar for managing complex, ephemeral, and decentralized environments. Traditional monitoring tools—built for static infrastructures—can’t keep pace with the elasticity and dynamism of the cloud.

Cloud-Native Challenges That Demand Observability

The cloud introduces several operational challenges that make observability indispensable:

- Dynamic environments: Containers, Kubernetes pods, and serverless functions are short-lived and rapidly scaling

- Decentralized services: Applications are split into microservices communicating across regions and platforms

- Tool sprawl: Multiple vendors, APIs, and systems create fragmented visibility

- Shared responsibility models: Cloud providers are responsible for the underlying infrastructure, but application performance remains your responsibility.

Observability bridges these visibility gaps by collecting and correlating telemetry across infrastructure, platforms, and applications, regardless of location.

Cloud Observability in Action

Cloud observability combines telemetry from diverse cloud layers to offer end-to-end visibility:

- IaaS (Infrastructure as a Service): Metrics and logs from virtual machines, networks, and storage.

- PaaS (Platform as a Service): Traces from managed databases, containers, and runtime services.

- SaaS and APIs: User experience monitoring and third-party dependency insights.

For organizations using container orchestration platforms like Kubernetes, Centreon’s Kubernetes monitoring integration provides detailed visibility into cluster health, workloads, and resource usage—ensuring cloud-native observability at scale.

Key benefits:

- Detect outages and performance bottlenecks in real time

- Diagnose misconfigurations in autoscaling and load balancing

- Understand cost implications tied to usage and resource efficiency

Learn how to effectively monitor cloud infrastructure with Centreon’s guide, offering foundational insights for building a resilient observability layer.

Observability-as-a-Service (OaaS)

To simplify implementation, many vendors now offer Observability-as-a-Service, allowing organizations to:

- Offload data collection, correlation, and visualization to a managed platform

- Eliminate infrastructure overhead for observability tools

- Leverage built-in machine learning for anomaly detection

Popular OaaS capabilities include:

- Unified dashboards for hybrid/multi-cloud infrastructure

- Pre-integrated data sources and agents

- Real-time alerting and incident correlation

- Support for OpenTelemetry as a standard for telemetry data ingestion

Toward Unified Observability Across Environments

In hybrid and multi-cloud scenarios, a unified observability layer ensures consistent visibility across:

- On-premise data centers

- Public and private cloud workloads

- Container orchestration platforms (Kubernetes, Docker Swarm)

- Serverless environments (AWS Lambda, Azure Functions, Google Cloud Functions)

This unification allows technical profiles such as Monitoring Managers and Tech Leads, as well as strategic decision-makers such as ITOps Managers, to:

- Monitor and compare workloads regardless of environment

- Make informed decisions on resource allocation and cloud strategy

- Ensure continuity of service, even across heterogeneous infrastructure

Observability Platforms and Tools

To fully realize the benefits of observability, organizations turn to observability platforms that ingest, process, analyze, and visualize telemetry data across complex, distributed systems. These platforms serve as the foundation for observability strategies in modern IT environments.

What Is an Observability Platform?

An observability platform is a centralized system that consolidates metrics, logs, and traces from various sources and enables teams to:

- Visualize data through dashboards and reports

- Configure intelligent alerting and anomaly detection

- Correlate events across applications, infrastructure, and services

- Accelerate incident response and root cause analysis

Unlike traditional monitoring tools, observability platforms are telemetry-agnostic and built for scale, flexibility, and intelligence.

Key Features of Modern Observability Platforms

| Feature | Description |
|---|---|
| Multi-signal ingestion | Collects metrics, logs, traces, events, and telemetry data |
| Data correlation | Connects anomalies across components to surface root causes |
| Dashboards & reporting | Unified visualizations for teams and stakeholders |
| AI/ML-powered insights | Predictive alerting, pattern detection, anomaly scoring |
| Integration-ready | Works with DevOps pipelines, ITSM tools, APIs, and cloud providers |
| Scalability & flexibility | Handles large data volumes across hybrid or multi-cloud setups |

Many observability solutions now incorporate AI (Artificial Intelligence) to automate anomaly detection, predict potential issues, and recommend remediations. By learning from historical telemetry patterns, these platforms help reduce noise and surface what really matters to software engineers and SRE teams. AI-driven observability enables code-level details to be interpreted in the broader business context—linking technical anomalies to user impact or revenue loss.

Best Observability Tools (Technology-Agnostic Examples)

Here are categories of observability tools, with typical examples found in many IT stacks:

Open Source Solutions:

- Prometheus (metrics)

- Loki (logs)

- Jaeger / OpenTelemetry (tracing)

Commercial Unified Platforms:

- Datadog

- New Relic

- Dynatrace

- Splunk Observability Cloud

- Elastic Observability

Visualization Tools:

- Grafana (dashboards for metrics/logs/traces)

These tools vary by use case, deployment model (on-premise vs. SaaS), extensibility, and pricing. Some organizations mix open source and commercial tools depending on their needs and maturity.

AI and Machine Learning in Observability

Modern observability platforms rely more than ever on AI to manage the scale and complexity of distributed systems. AI helps process vast volumes of telemetry data—metrics, logs, traces—and extract meaningful patterns in real time.

Thanks to AI, observability tools can:

- Detect anomalies without relying on static thresholds

- Predict incidents before they affect users

- Prioritize alerts based on business impact and severity

- Recommend automated remediation steps to reduce downtime

By integrating AI into observability workflows, IT teams are better equipped to reduce alert fatigue, resolve incidents faster, and improve overall operational resilience. For ITOps managers, AI also contributes to cost control, SLA adherence, and predictive maintenance, making it a powerful asset in modern infrastructure strategies.

As telemetry environments grow more complex, AI becomes essential not just for analysis, but for transforming raw data into actionable intelligence.
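Detecting anomalies without static thresholds does not always require machine learning; a rolling z-score against a trailing window is a common statistical baseline that shows the idea. This Python sketch uses that simple statistical method, not the ML models commercial platforms use, and the 20-point window and the 3-standard-deviation threshold are assumptions to tune per signal.

```python
import statistics
from collections import deque


def rolling_zscore_anomalies(values, window=20, z_threshold=3.0):
    """Return indices whose value lies more than z_threshold standard deviations
    from the mean of the preceding `window` values.

    The baseline moves with the data, so no fixed threshold is configured.
    Flagged points stay in the history, so a large spike widens the baseline
    for the next `window` points.
    """
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:
            mean = statistics.mean(history)
            stdev = statistics.pstdev(history)
            if stdev > 0 and abs(value - mean) / stdev > z_threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


latency_ms = [100, 102] * 10 + [160] + [101] * 5
print(rolling_zscore_anomalies(latency_ms))  # [20]
```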

Unified Observability: A Single Pane of Glass

As tool sprawl becomes a major pain point, many IT organizations aim for unified observability—a strategy that consolidates observability signals into a single platform:

- Reduces silos and duplicated tools

- Streamlines onboarding and team adoption

- Enhances cross-team visibility and collaboration

This unification aligns directly with strategic goals like cost control, skill development, and process optimization, all key concerns for decision-makers like IT or ITOps Managers.

Application & Infrastructure Observability

True observability requires visibility at every layer of the IT stack—from code-level functions to the physical or virtual infrastructure supporting them. This includes both application observability and infrastructure observability, each offering a distinct but complementary perspective.

Application Observability

Application observability focuses on the behavior and performance of applications, especially in complex architectures like microservices and serverless functions. It aims to ensure that applications meet user expectations for availability, responsiveness, and reliability.

Key capabilities:

- Transaction tracing across microservices

- Latency monitoring for API endpoints

- Error tracking and exception logging

- User experience metrics, such as page load time or app responsiveness

- Code-level diagnostics for root cause isolation

With application observability, development teams can detect regressions early, debug live systems, and optimize performance before issues impact users.

Infrastructure Observability

Infrastructure observability involves monitoring the physical and virtual components that support application workloads. This includes:

- Servers and virtual machines

- Storage systems and databases

- Containers and orchestration tools (e.g., Kubernetes)

- Networks, firewalls, and load balancers

Key metrics and signals include:

- CPU, memory, and disk utilization

- Network throughput and packet loss

- Container lifecycle events

- Infrastructure component health and availability

Infrastructure observability helps IT teams and Tech Leads maintain system uptime, enforce SLA compliance, and proactively manage capacity and scaling.

Why Combine Application and Infrastructure Observability?

Siloed observability leads to blind spots and delayed incident resolution. By correlating application-level and infrastructure-level telemetry, teams gain:

- A complete picture of the system

- Ability to trace user issues back to infrastructure causes

- Faster incident triage and response

- Improved cross-functional collaboration

This is especially important in hybrid or multi-cloud environments, where ownership and visibility are often distributed.

Use Case Examples

| Use Case | Application Observability Role | Infrastructure Observability Role |
|---|---|---|
| Slow checkout in e-commerce app | Trace shows bottleneck in payment service | CPU saturation on container host |
| SLA breach for a B2B SaaS platform | Monitoring latency and error rates of key APIs | Network latency on a cloud region |
| Resource overuse after product launch | Spikes in frontend usage revealed via user metrics | Auto-scaling not triggered due to misconfiguration |
| Security incident or suspicious behavior | Unusual login patterns or failed API authentications | Unexpected traffic spikes, port scans |

All IT team members benefit here:

- Tech Leads need deep technical insights to troubleshoot across the stack.

- ITOps Managers seek system-level observability to guarantee performance, demonstrate compliance, and support digital transformation.

From Observability Data to Actionable Insights

Collecting telemetry is only the first step. The real value of observability lies in transforming raw data into contextualized, actionable insights that empower teams to make informed decisions and resolve issues rapidly.

What Is Telemetry Data?

Telemetry refers to the automated collection and transmission of data from software systems and infrastructure. It is the fuel that powers observability.

Telemetry includes:

- Metrics: Quantitative data over time (e.g., request rate, memory usage)

- Logs: Human-readable records of events (e.g., errors, transactions)

- Traces: Event timelines showing request flow through services

- Events: State changes or conditions triggered within systems

- Custom signals: Business-specific KPIs, feature usage, etc.

Telemetry is the “raw signal.” Observability transforms that signal into understanding.

From Raw Data to Insight: The Observability Pipeline

The journey from telemetry to insight typically involves several stages:

1. Ingestion

   - Data is collected from applications, infrastructure, containers, APIs, etc.

   - Often through agents, SDKs, or APIs (e.g., OpenTelemetry)

2. Normalization & Enrichment

   - Logs and metrics are structured, tagged, and timestamped

   - Context is added (e.g., service name, deployment version, user ID)

3. Correlation & Contextualization

   - Metrics, logs, and traces are connected across services and layers

   - Enables root cause analysis through pattern matching and dependency mapping

4. Visualization & Reporting

   - Dashboards and heatmaps display real-time system health

   - SLO/SLI performance can be tracked for compliance

5. Alerting & Automation

   - Rules or AI/ML models detect anomalies or threshold violations

   - Trigger alerts, tickets, auto-remediation workflows
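The normalization, enrichment, and alerting stages can be sketched in a few lines of Python. Ingestion is simulated with an in-memory list of events from two differently configured agents; the field names, the `DEPLOYMENTS` version map, and the error threshold are all hypothetical. In practice these stages usually run in a collector or agent rather than in application code.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical deployment metadata used for enrichment.
DEPLOYMENTS = {"payment": "v2.4.1", "catalog": "v1.9.0"}


def normalize(raw):
    """Map agent-specific field names to one schema and parse epoch seconds as UTC."""
    return {
        "time": datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        "service": raw.get("svc") or raw.get("service"),
        "level": raw["lvl"].upper(),
        "message": raw["msg"],
    }


def enrich(event):
    """Attach context that the raw event does not carry (here, the deployed version)."""
    event["version"] = DEPLOYMENTS.get(event["service"], "unknown")
    return event


def alerts(events, max_errors=2):
    """One alert per (service, version) whose ERROR count exceeds max_errors."""
    errors = Counter((e["service"], e["version"]) for e in events if e["level"] == "ERROR")
    return [f"{svc}@{ver}: {n} errors" for (svc, ver), n in errors.items() if n > max_errors]


raw_events = [
    {"ts": 1700000000, "svc": "payment", "lvl": "error", "msg": "gateway timeout"},
    {"ts": 1700000005, "service": "payment", "lvl": "ERROR", "msg": "gateway timeout"},
    {"ts": 1700000009, "svc": "payment", "lvl": "error", "msg": "gateway timeout"},
    {"ts": 1700000012, "svc": "catalog", "lvl": "info", "msg": "cache warmed"},
]
events = [enrich(normalize(r)) for r in raw_events]
print(alerts(events))  # ['payment@v2.4.1: 3 errors']
```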

The Power of Actionable Insights

What distinguishes observability from raw monitoring is the ability to act on what’s observed. Actionable insights mean:

- Fewer false positives: Alerts triggered only when context warrants action

- Shorter MTTR: Fast root cause identification and incident resolution

- Better resource planning: Trend analysis informs scaling and capacity

- Improved user experience: Real-time awareness of performance issues

For Tech Leads, insights reduce investigation time and manual diagnostics.

For ITOps Managers, they support KPI tracking, SLA reporting, and IT cost optimization.

Observability becomes even more valuable when telemetry is interpreted within its business context—connecting infrastructure behavior to customer outcomes, financial performance, or service-level commitments. For example, a spike in database latency isn’t just a technical concern; it’s a potential obstacle to successful transactions or SLA compliance. Contextualizing observability data enables both technical teams and business leaders to align priorities and actions.

Example: Observability Insight in Practice

Imagine an e-commerce platform experiences increased checkout failures:

- Metric: Spike in failed transactions per minute

- Log: “Payment gateway timeout” events appear in backend logs

- Trace: Slow span on the payment service API call

- Insight: Recent config change to retry timeout is causing service overload

The observability stack enables a fast, data-backed diagnosis and rollback or fix within minutes, preserving both user experience and revenue.
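The final step of that diagnosis, connecting the spike to a recent configuration change, can be approximated by listing change events (deployments, config edits) recorded shortly before the metric first crossed its threshold. The Python sketch below assumes change events are already collected as telemetry; the 15-minute lookback, the event schema, and the sample data are hypothetical, and closeness in time only flags a suspect, it does not prove causation.

```python
from dataclasses import dataclass


@dataclass
class ChangeEvent:
    timestamp: int  # epoch seconds
    service: str
    description: str


def first_spike(series, threshold):
    """Timestamp of the first (timestamp, value) point above threshold, or None."""
    for ts, value in series:
        if value > threshold:
            return ts
    return None


def suspect_changes(series, threshold, changes, lookback_s=900):
    """Change events recorded within lookback_s seconds before the spike, newest first."""
    spike_at = first_spike(series, threshold)
    if spike_at is None:
        return []
    window = [c for c in changes if spike_at - lookback_s <= c.timestamp <= spike_at]
    return sorted(window, key=lambda c: c.timestamp, reverse=True)


failed_tx_per_min = [(1000, 2), (1060, 3), (1120, 41), (1180, 57)]
changes = [
    ChangeEvent(100, "catalog", "image rebuild"),
    ChangeEvent(1090, "payment", "retry timeout changed"),
]
for c in suspect_changes(failed_tx_per_min, threshold=20, changes=changes):
    print(c.service, "-", c.description)  # payment - retry timeout changed
```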

By turning telemetry into actionable insights, observability gives teams a shared reality—one that’s rooted in data and aligned with business outcomes.

Best Practices for Building Observability

Implementing observability is not just about deploying tools—it’s about establishing a systematic framework that aligns with both technical and business goals. Successful observability practices are built on cross-functional alignment, data consistency, and process maturity.

Here are the key best practices for building and scaling observability in modern IT environments:

1. Design for Observability from the Start

- Embed observability into system architecture and the software development lifecycle (SDLC).

- Instrument applications with OpenTelemetry or similar standards.

- Treat telemetry as a first-class citizen in DevOps pipelines.

Systems designed for observability are easier to operate, debug, and evolve.

2. Embrace the Three Pillars Holistically

- Collect and correlate metrics, logs, and traces across environments.

- Avoid pillar silos—integrate data into unified views.

- Don’t over-rely on one pillar (e.g., metrics-only).

A balanced telemetry strategy supports comprehensive diagnostics.
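Correlating pillars usually hinges on a shared identifier. A common convention is to stamp every log line with the trace ID of the request that produced it, so a slow trace can be expanded into its logs. A minimal Python sketch, assuming hypothetical `trace_id`, `duration_ms`, and `message` fields (illustrative, not a specific backend's schema):

```python
from collections import defaultdict

def logs_for_slow_traces(spans, logs, threshold_ms):
    """Group log messages under every trace that contains a span slower than threshold_ms."""
    slow_traces = {s["trace_id"] for s in spans if s["duration_ms"] > threshold_ms}
    grouped = defaultdict(list)
    for record in logs:
        # Records without a trace ID cannot be joined and are skipped.
        if record.get("trace_id") in slow_traces:
            grouped[record["trace_id"]].append(record["message"])
    return dict(grouped)
```

Log records that carry no trace ID simply drop out of the join, which is one practical argument for propagating trace context through every service.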

3. Build a Cross-Team Observability Culture

- Encourage collaboration between Dev, Ops, and SRE teams.

- Establish shared dashboards, alerts, and incident channels.

- Create runbooks and postmortems that reference telemetry insights.

Observability works best when teams share responsibility for system health.

4. Prioritize Business-Centric KPIs

- Link observability data to business outcomes (e.g., conversion rates, SLA compliance).

- Track Service Level Indicators (SLIs) that reflect user experience.

- Use telemetry to improve customer-facing metrics, not just infrastructure stats.

Aligning observability with business value is key to securing executive support and budget.
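An availability SLI is commonly expressed as good events divided by valid events and compared against a service level objective (SLO); the gap between the SLO and 100% is the error budget. The Python sketch below computes both from request counts. The function names, the 99.9% target, and the request counts are illustrative assumptions.

```python
def availability_sli(good, total):
    """SLI as the fraction of valid requests that succeeded."""
    if total <= 0:
        raise ValueError("total must be positive")
    return good / total

def error_budget_remaining(good, total, slo):
    """Fraction of the error budget left: 1.0 = untouched, 0 = spent, < 0 = SLO breached."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 < slo < 1:
        raise ValueError("slo must be between 0 and 1 (exclusive)")
    allowed_failures = (1 - slo) * total   # failures the SLO tolerates
    actual_failures = total - good
    return 1 - actual_failures / allowed_failures
```

For example, 999,400 successful requests out of 1,000,000 give an SLI of 0.9994; against a 99.9% SLO (1,000 tolerated failures), the 600 actual failures leave about 40% of the error budget.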

5. Start Small, Scale Smart

- Begin with critical services and expand iteratively.

- Focus on high-value signals and actionable alerts.

- Don’t overload teams with unnecessary data or noise.

Clarity and focus beat complexity and overload.

6. Standardize Tags, Labels, and Metadata

- Enforce consistent naming conventions across environments.

- Use labels to organize telemetry by service, region, team, etc.

- Consistent metadata simplifies filtering, searching, correlation, and cost attribution.

Consistency enables faster investigation and easier scaling.
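Naming conventions are easiest to enforce in code, for example in a shared library or a pipeline stage that normalizes labels before telemetry is shipped. A hypothetical Python sketch: the required keys (`service`, `env`, `region`, `team`), the alias map, and the lowercase snake_case rule are example conventions, not a standard.

```python
import re

REQUIRED_KEYS = {"service", "env", "region", "team"}
# Common alternative spellings mapped to the canonical key (example convention).
ALIASES = {"svc": "service", "environment": "env", "owner": "team"}
_INVALID_CHARS = re.compile(r"[^a-z0-9_]+")

def normalize_labels(labels):
    """Return (normalized_labels, missing_required_keys)."""
    normalized = {}
    for key, value in labels.items():
        # Lowercase the key and replace anything outside [a-z0-9_] with "_".
        key = _INVALID_CHARS.sub("_", key.strip().lower())
        key = ALIASES.get(key, key)
        normalized[key] = str(value).strip().lower()
    missing = sorted(REQUIRED_KEYS - set(normalized))
    return normalized, missing
```

A pipeline could reject or quarantine telemetry whose `missing` list is non-empty, so gaps surface at ingestion time rather than during an incident.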

7. Leverage Automation and Machine Learning

- Use AI/ML for anomaly detection, noise suppression, and alert prioritization.

- Automate repetitive tasks: alert routing, ticket creation, even remediation.

- Reduce operational toil and increase agility.

Automation extends the value of observability and supports IT efficiency.
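Production AIOps features rely on far richer models, but the core idea of statistical anomaly detection fits in a few lines. The sketch below flags points that deviate from a rolling baseline by more than a chosen number of standard deviations (a z-score test). It is a simple statistical stand-in, not machine learning, and the window size and threshold are assumptions to tune per signal.

```python
from statistics import mean, stdev

def zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change as anomalous.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

Applied to latencies such as `[100, 102, 98, 101, 99, 100, 250, 101]`, only index 6 (the 250 ms sample) is flagged. Note that the spike then inflates the baseline for the next few points, one reason real detectors use robust statistics or exclude known anomalies from the baseline.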

8. Monitor the Observability Stack Itself

- Keep observability systems highly available and secure.

- Ensure telemetry pipelines are monitored and failover-capable.

- Audit data integrity and latency periodically.

Observability tools are mission-critical and must be observable too.
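One simple way to observe the telemetry pipeline itself is a freshness check: compare the timestamp of the newest data point each source delivered with the current time, and alert when the lag exceeds a budget or a source never reported. A minimal Python sketch; the source names and the 120-second budget are hypothetical.

```python
import math

def stale_sources(last_seen, expected, now, max_lag_s=120.0):
    """Return {source: lag_seconds} for expected sources that are late or silent.

    last_seen maps a telemetry source to the epoch timestamp of its newest data point.
    A source missing from last_seen is reported with an infinite lag.
    """
    report = {}
    for source in expected:
        ts = last_seen.get(source)
        lag = math.inf if ts is None else now - ts
        if lag > max_lag_s:
            report[source] = lag
    return report
```

Running such a check from outside the pipeline it watches matters: if it shares infrastructure with the pipeline, one outage can silence both the data and the alert about the data.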

A Maturity Framework for Observability

Organizations can assess their observability capability using a maturity model:

| Level | Characteristics |
| --- | --- |
| Basic | Isolated monitoring, limited logs, no tracing |
| Developing | Manual correlation, dashboard use, beginning standardization |
| Proficient | Full telemetry, unified views, automated alerts |
| Advanced | Cross-team adoption, business KPIs, ML-driven insights |
| Optimized | Fully integrated into strategy, predictive insights, self-healing systems |

This framework helps IT/ITOps Managers and business leaders track progress and justify investments in observability capabilities.

Observability: Examples and Use Cases

Observability is not a theoretical concept; it is a practical capability that enables organizations to maintain system reliability, improve performance, and support digital transformation. Below are representative use cases and examples that demonstrate its value across IT and business contexts.

1. E-Commerce Platform: Checkout Failures

Scenario: A major online retailer observes a spike in abandoned shopping carts.

- Telemetry Insight:

- Metrics reveal a drop in successful payment transactions.

- Logs show increased timeout errors from the payment service.

- Traces identify a dependency bottleneck introduced in a recent deployment.

- Outcome:

- Engineers quickly identify a misconfigured API timeout.

- A rollback is initiated, and sales begin to recover within minutes.

Observability allows rapid triage, minimizes revenue loss, and protects user experience.

2. Financial Services: SLA Violation Detection

Scenario: A banking application must maintain 99.99% availability for core services.

- Telemetry Insight:

- SLO dashboards indicate response times breaching thresholds.

- Root cause: degraded performance in database connections due to a failed patch.

- Outcome:

- Observability enables early alerting and automated ticket generation.

- The SLA breach is documented, and a compensating control is applied proactively.

Observability helps ITOps managers demonstrate regulatory compliance and SLA assurance.
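To put a 99.99% availability target in perspective, the permitted downtime is (1 − availability) × period length. The small Python helper below does that arithmetic; treating a month as 30 days and a year as 365 days is a simplifying assumption.

```python
def allowed_downtime_minutes(availability, period_days):
    """Minutes of downtime permitted by an availability target over a period."""
    return (1 - availability) * period_days * 24 * 60

for label, days in [("30-day month", 30), ("365-day year", 365)]:
    minutes = allowed_downtime_minutes(0.9999, days)
    print(f"99.99% over a {label}: {minutes:.2f} min")
# 99.99% over a 30-day month: 4.32 min
# 99.99% over a 365-day year: 52.56 min
```

A budget of roughly four minutes a month leaves little room for manual diagnosis, which is why early alerting and automated ticketing matter in this scenario.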

3. SaaS Provider: Optimizing Cloud Spend

Scenario: A SaaS company seeks to reduce cloud costs while maintaining performance.

- Telemetry Insight:

- Metrics and logs show resource over-provisioning across multiple Kubernetes clusters.

- Tracing reveals underutilized services with minimal user interaction.

- Outcome:

- Teams downscale resources during off-peak hours using autoscaling policies.

- Cost savings are quantified and reported to finance and operations.

Observability supports both technical performance and business efficiency.
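The over-provisioning finding in this scenario amounts to comparing observed usage with what each workload requested. A hypothetical Python sketch that flags workloads whose 95th-percentile CPU usage stays below a fraction of their CPU request; the 30% cutoff, the millicore units, and the data layout are assumptions, and the percentile uses the nearest-rank method.

```python
import math

def p95(samples):
    """95th percentile by the nearest-rank method (samples must be non-empty)."""
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]

def underutilized(workloads, cutoff=0.30):
    """workloads maps name -> (cpu_request_millicores, usage_samples_millicores).

    Returns {name: p95_usage / request} for workloads below the cutoff.
    """
    result = {}
    for name, (request, samples) in workloads.items():
        ratio = p95(samples) / request
        if ratio < cutoff:
            result[name] = round(ratio, 2)
    return result
```

Using a high percentile rather than the average keeps bursty but legitimate workloads from being flagged; the resulting ratios can feed right-sizing or the autoscaling policies mentioned above.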

4. Manufacturing Company: Preventive Maintenance

Scenario: A manufacturer runs IoT-connected machines that must operate 24/7.

- Telemetry Insight:

- Metrics highlight early signs of thermal stress on motor components.

- Logs show increasing error rates in device firmware.

- Outcome:

- Maintenance is scheduled before equipment failure.

- Avoiding unplanned downtime translates into a high return on investment (ROI).

Observability enables predictive insights and operational continuity.
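Predictive maintenance often starts with something as simple as fitting a trend to a degrading metric and estimating when it will cross a limit. The sketch below fits a least-squares line to hypothetical temperature readings and extrapolates the hours remaining until a threshold. The readings, units, and limit are assumptions, and real deployments would use more robust models.

```python
def hours_until_threshold(hours, temps_c, limit_c):
    """Fit temp = intercept + slope * hour by least squares and return the hours
    from the last reading until the fitted line reaches limit_c.

    Returns None when the trend is flat or falling. Needs at least two
    readings taken at distinct times.
    """
    n = len(hours)
    mean_x = sum(hours) / n
    mean_y = sum(temps_c) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, temps_c))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing = (limit_c - intercept) / slope   # hour at which the line hits the limit
    return max(crossing - hours[-1], 0.0)
```

With readings of 70, 71, 72, 73, and 74 °C taken hourly and a 90 °C limit, the fitted line crosses the limit 16 hours after the last reading, enough lead time to schedule maintenance.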

5. Public Sector IT: Digital Service Monitoring

Scenario: A government agency provides online citizen services with strict availability requirements.

- Telemetry Insight:

- Trace data shows performance lags during login and file upload operations.

- Correlated logs and metrics highlight storage latency and expired SSL certificates.

- Outcome:

- The team remediates issues quickly and shares observability reports with stakeholders.

- User trust is maintained and internal SLAs are upheld.

Observability facilitates transparency and mission-critical service assurance.
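Expired certificates are a classic blind spot that a scheduled check can catch before users do. Python's standard `ssl` module returns a certificate's `notAfter` field (for example from `SSLSocket.getpeercert()`) as a string such as `"Jan  5 09:34:43 2018 GMT"`, and `ssl.cert_time_to_seconds()` converts that string to an epoch timestamp. The sketch below classifies a certificate from its `notAfter` string; the 30-day warning window and the status names are assumptions, and fetching the certificate from a live host is left out.

```python
import ssl
import time

def cert_status(not_after, now=None, warn_days=30):
    """Classify a certificate as OK, EXPIRING, or EXPIRED from its notAfter string.

    Returns (status, days_left); days_left is negative once the certificate has expired.
    """
    now = time.time() if now is None else now
    days_left = (ssl.cert_time_to_seconds(not_after) - now) / 86400
    if days_left < 0:
        return "EXPIRED", days_left
    if days_left < warn_days:
        return "EXPIRING", days_left
    return "OK", days_left
```

Feeding the days-left value into the monitoring system as a metric, rather than only a status, lets dashboards show how much runway each service has.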

Summary of Use Cases by Domain

| Industry | Observability Application |
| --- | --- |
| E-Commerce | Transaction diagnostics, revenue protection |
| Financial Services | SLA tracking, compliance reporting |
| SaaS & Tech | Cost optimization, DevOps acceleration |
| Manufacturing | Asset performance, predictive maintenance |
| Public Sector | Digital service reliability, stakeholder reporting |

These examples show how observability is not just about data—it’s about using that data to make faster, smarter decisions that protect the business, optimize IT resources, and improve customer satisfaction.

Observability Challenges and Future Trends

As observability matures from a niche practice to a mainstream discipline, organizations face new challenges and must prepare for emerging trends that will shape its future. Understanding these dynamics is critical for building a scalable and future-proof observability strategy.

Common Challenges in Observability Adoption

Despite its promise, observability initiatives often face roadblocks:

1. Tool Sprawl and Data Fragmentation

- Many teams use disparate monitoring, logging, and tracing tools without integration.

- Leads to visibility gaps and siloed diagnostics.

2. Alert Fatigue

- Poorly tuned systems generate excessive noise.

- Teams may overlook real incidents due to overwhelming alerts.

3. Skill Gaps and Team Silos

- Lack of training in telemetry standards (e.g., OpenTelemetry).

- Difficulty aligning Dev, Ops, and SRE on a unified strategy.

4. Cost and Data Volume

- Storing high-cardinality telemetry at scale can be expensive.

- Balancing observability depth with budget is a common concern.

5. Resistance to Change

- Fear of operational disruption.

- Risk aversion around new tools and architectural shifts.

ITOps Managers must navigate these issues strategically to deliver consistent value and adoption across the organization.
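Cardinality drives much of the cost challenge. In label-based metrics systems such as Prometheus, each unique combination of label values becomes its own time series, so the worst-case series count for one metric is the product of the number of distinct values per label. A quick Python estimate with hypothetical label counts shows why adding a per-user label is risky:

```python
from math import prod

def max_series(label_value_counts):
    """Upper bound on time series for one metric: product of distinct values per label."""
    return prod(label_value_counts.values())

base = {"service": 40, "endpoint": 25, "status_code": 6, "region": 4}
print(max_series(base))                          # 24000
print(max_series({**base, "user_id": 100_000}))  # 2400000000
```

The real count is usually lower, since not every combination occurs, but the multiplication explains why unbounded identifiers (user IDs, request IDs) belong in logs or traces rather than in metric labels.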

Key Trends Shaping the Future of Observability

1. Rise of Open Standards (e.g., OpenTelemetry)

- Ensures vendor-neutral instrumentation and portability

- Promotes consistency across tools and environments

2. AI-Powered Observability

- Anomaly detection and root cause analysis powered by machine learning

- Alert prioritization and noise reduction via intelligent algorithms

3. Shift-Left Observability

- Bringing telemetry earlier into development pipelines

- Enables pre-production validation and performance optimization

4. Observability-as-Code

- Define telemetry configuration using code (YAML, Terraform, etc.)

- Integrates observability into CI/CD workflows

5. Unified Observability Platforms

- Consolidation of tools into end-to-end platforms

- Emphasis on correlation across metrics, logs, traces, and events

6. Observability for Business Insights

- Bridging the gap between technical telemetry and business KPIs

- Enables teams to measure user experience, conversion rates, cost-to-serve, etc.
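To illustrate the Observability-as-Code trend, here is a hypothetical Python sketch in which alert rules live in version control as plain data and a lint step runs in CI before they are deployed. The `AlertRule` fields, the allowed severities, and the lint rules are assumptions for illustration; real tools have their own schemas and validators (Prometheus, for instance, ships `promtool check rules` for its rule files).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AlertRule:
    name: str
    expr: str            # query in the backend's own language (placeholder here)
    for_minutes: int     # how long the condition must hold before firing
    severity: str
    runbook_url: str = ""

ALLOWED_SEVERITIES = {"info", "warning", "critical"}

def lint(rules):
    """Return a list of problems; an empty list means the rule set passes."""
    problems = []
    seen = set()
    for rule in rules:
        if rule.name in seen:
            problems.append(f"{rule.name}: duplicate name")
        seen.add(rule.name)
        if rule.severity not in ALLOWED_SEVERITIES:
            problems.append(f"{rule.name}: unknown severity {rule.severity!r}")
        if rule.severity == "critical" and not rule.runbook_url:
            problems.append(f"{rule.name}: critical alerts need a runbook")
        if rule.for_minutes < 1:
            problems.append(f"{rule.name}: 'for' must be at least 1 minute to avoid flapping")
    return problems
```

A CI job would call `lint()` on the committed rules and fail the build if it returns anything, so alerting changes get the same review and rollback path as application code.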

Strategic Considerations for the Future

| Focus Area | Strategic Objective |
| --- | --- |
| Integration | Eliminate silos, reduce tool overlap |
| Automation | Scale incident response, reduce toil |
| Flexibility | Support hybrid, cloud, and edge workloads |
| Security & Compliance | Audit trails, data governance, encryption |
| Budget Efficiency | Optimize data retention and ingestion costs |

For tech leads, the future lies in simplified, automated, and developer-friendly observability.

For IT or ITOps managers, it means scalability, cost control, and strategic value from observability investments.

How Does Centreon Contribute to Observability?

Centreon plays a critical role within the observability ecosystem by delivering deep, real-time visibility into IT infrastructure, and by integrating seamlessly with observability stacks.

Centreon offers organizations—especially those managing complex, hybrid IT environments—a robust foundation for observability through its:

- Extensive monitoring capabilities

- Open architecture

- Integration with telemetry pipelines

- Powerful dashboards and alerting

Centreon Key Capabilities That Support Observability

| Capability | Observability Value |
| --- | --- |
| Comprehensive infrastructure monitoring | Full-stack visibility: servers, networks, cloud, containers |
| Real-time alerting and thresholds | Early anomaly detection and incident prevention |
| Auto-discovery and templating | Faster instrumentation and observability at scale |
| Event correlation and root cause views | Faster MTTR and contextual understanding of system issues |
| Custom dashboards and SLO visualization | Clear reporting for operational and business stakeholders |

Centreon enables IT teams to observe 100% of their infrastructure, eliminate blind spots, and detect performance degradations early.
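Threshold-based alerting of this kind is typically fed by check plugins. The Python sketch below assumes the Nagios-compatible plugin conventions that Centreon's monitoring engine builds on: exit code 0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN, and a status line optionally followed by `|` and performance data in the form `'label'=value[UOM];warn;crit`. The metric name, the thresholds, and the `evaluate` function are hypothetical, and the sketch uses simple upper thresholds rather than the full Nagios range syntax.

```python
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}

def evaluate(latency_ms, warn_ms=500.0, crit_ms=1000.0):
    """Return (exit_code, output_line) for a latency sample.

    A value above crit_ms is CRITICAL, above warn_ms is WARNING, otherwise OK.
    The output line carries performance data after the '|' separator.
    """
    if latency_ms is None:
        return UNKNOWN, "UNKNOWN - no latency sample available"
    if latency_ms > crit_ms:
        code = CRITICAL
    elif latency_ms > warn_ms:
        code = WARNING
    else:
        code = OK
    perfdata = f"'latency'={latency_ms:g}ms;{warn_ms:g};{crit_ms:g}"
    return code, f"{LABELS[code]} - checkout latency {latency_ms:g} ms | {perfdata}"
```

A plugin script would print the returned line and terminate with `sys.exit(code)`; the engine reads the exit code for the alert state and the text after `|` for graphing.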

Centreon complements other observability solutions by contributing reliable, structured monitoring data. This makes it easier for organizations to unify their tooling into a single platform that blends infrastructure metrics with application insights, enabling a more complete and business-aligned observability strategy.

As Centreon highlights, there is no business observability stack without connected monitoring, and its platform delivers that foundational connectivity layer.

Seamless Integration with Observability Stacks

Centreon is designed for interoperability, allowing organizations to enrich their observability practice through integration with:

- Log management systems (e.g., ELK stack, Splunk)

- Tracing frameworks (e.g., OpenTelemetry collectors)

- Cloud-native platforms (e.g., AWS CloudWatch, Azure Monitor)

- ITSM and incident response tools (e.g., ServiceNow, PagerDuty)

These integrations ensure that Centreon contributes structured, normalized monitoring data that can be ingested into broader observability pipelines.

Explore:

👉 Use Case – Centreon and Observability

👉 Centreon Feature Set – Extensive Integrations

Centreon’s Value for Tech Leads and ITOps Managers

To understand how Centreon fits into the broader observability ecosystem, see this deep dive on whether Centreon is an observability solution.

- For Tech Leads:

Centreon offers technical depth and monitoring precision, enabling them to instrument systems efficiently, reduce alert fatigue, and maintain visibility across diverse environments.

- For ITOps Managers:

Centreon contributes to strategic goals such as SLA assurance, IT optimization, and cost-efficient operations, providing a reliable layer within an observability-driven organization.

Ready to Extend Your Observability?

Centreon helps IT teams monitor anything, anywhere, and can be a powerful building block in your observability architecture.

Next steps:

- Explore how Centreon enables modern observability tailored for hybrid IT.

- Learn more about Centreon’s capabilities.

- Get a personalized demo to see what Centreon does and how it could work for you.

FAQ – Frequently Asked Questions About Observability

Have more questions about observability? You’re not alone. Below, we’ve compiled answers to some of the most common questions IT professionals, developers, and ITOps leaders ask when exploring observability strategies, tools, and best practices.

What is observability in software?

Observability in software refers to the ability to understand the internal workings of a software system by examining its external outputs, such as logs, metrics, and traces. It enables developers and IT teams to detect bugs, monitor performance, and debug production issues without changing the code or adding manual instrumentation to the running system.

What is observability in DevOps?

In DevOps, observability supports continuous delivery and operational excellence by providing real-time insight into how systems behave in production. It enables teams to shift performance monitoring left (earlier in the delivery pipeline), catch regressions early, and respond to incidents faster, all while promoting collaboration between development and operations teams.

What is cloud observability?

Cloud observability refers to the ability to monitor, analyze, and gain insights into applications and infrastructure running in cloud environments—whether public, private, or hybrid. It includes collecting and correlating telemetry from containers, serverless functions, virtual machines, and cloud-native services to ensure performance, reliability, and compliance across distributed systems.

What is application observability?

Application observability is the practice of monitoring the internal state and performance of software applications. It focuses on telemetry related to code execution, API latency, user behavior, and error tracking. With application observability, teams can trace how individual requests behave, identify bottlenecks, and optimize the end-user experience.

How to implement observability?

Implementing observability starts by instrumenting systems to collect telemetry data—logs, metrics, and traces—using agents, SDKs, or open standards like OpenTelemetry. Next, centralize this data in a unified platform, define service-level indicators (SLIs), set alerts, and create dashboards for visualization. Finally, promote a culture of observability across teams to ensure adoption and collaboration.

What are observability tools?

Observability tools are platforms and services used to collect, analyze, and visualize telemetry data. They typically support the ingestion of metrics, logs, and traces and offer capabilities like alerting, dashboarding, root cause analysis, and correlation across distributed systems. Examples include open source tools like Prometheus and Jaeger, or commercial platforms like Datadog, New Relic, and Centreon.

How does observability work?

Observability works by collecting telemetry data from software and infrastructure components, enriching it with contextual metadata, and analyzing it through dashboards, alerts, or automated systems. This process allows teams to understand system health, detect anomalies, correlate events, and resolve issues faster. The richer and more structured the telemetry, the more effective observability becomes.
